AI Multi Tool Search Assistant


Multi-source search that pairs a locally hosted LLM (llama3.2:3b) with a set of tools: math tools, Tavily Search, WikipediaQueryRun, DuckDuckGoSearchRun, and PubmedQueryRun.

Prerequisites

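Required Python packages:

- streamlit: `pip install streamlit`
- langchain (plus the community and Ollama integrations imported by the code): `pip install langchain langchain-community langchain-ollama`
- requests: `pip install requests`
- python-dotenv: `pip install python-dotenv`
- Tavily: `pip install tavily-python` (optional; the code below calls the Tavily REST API directly with `requests`)
- Back-end packages needed by the LangChain community wrappers for Wikipedia, DuckDuckGo, and PubMed: `pip install wikipedia duckduckgo-search xmltodict`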

Additional requirements:

- Ollama server running with llama3.2:3b model

- Tavily API key
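Both requirements can be checked before launching the app. The following is a hedged preflight sketch, not part of the original project: it assumes the Tavily key is exported as an environment variable named `TAVILY_API_KEY` (a name chosen here for illustration) and asks the local Ollama server for its pulled models through the `/api/tags` endpoint on the default port 11434.

```python
import json
import os
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama port, as used by the app
MODEL = "llama3.2:3b"


def model_available(tags: dict, model: str = MODEL) -> bool:
    """Return True if `model` is listed in an Ollama /api/tags response."""
    return model in {m.get("name") for m in tags.get("models", [])}


def preflight() -> list[str]:
    """Collect readable problems; an empty list means the app should be able to start."""
    problems = []
    if not os.getenv("TAVILY_API_KEY"):  # assumed variable name
        problems.append("TAVILY_API_KEY is not set")
    try:
        with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=5) as resp:
            tags = json.load(resp)
    except (OSError, ValueError) as exc:  # URLError is an OSError; bad JSON is a ValueError
        problems.append(f"Ollama not reachable at {OLLAMA_URL}: {exc}")
    else:
        if not model_available(tags):
            problems.append(f"{MODEL} not found; run: ollama pull {MODEL}")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("Ready" if not issues else "\n".join(issues))
```

Running it with `python preflight.py` prints either `Ready` or one line per missing requirement.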

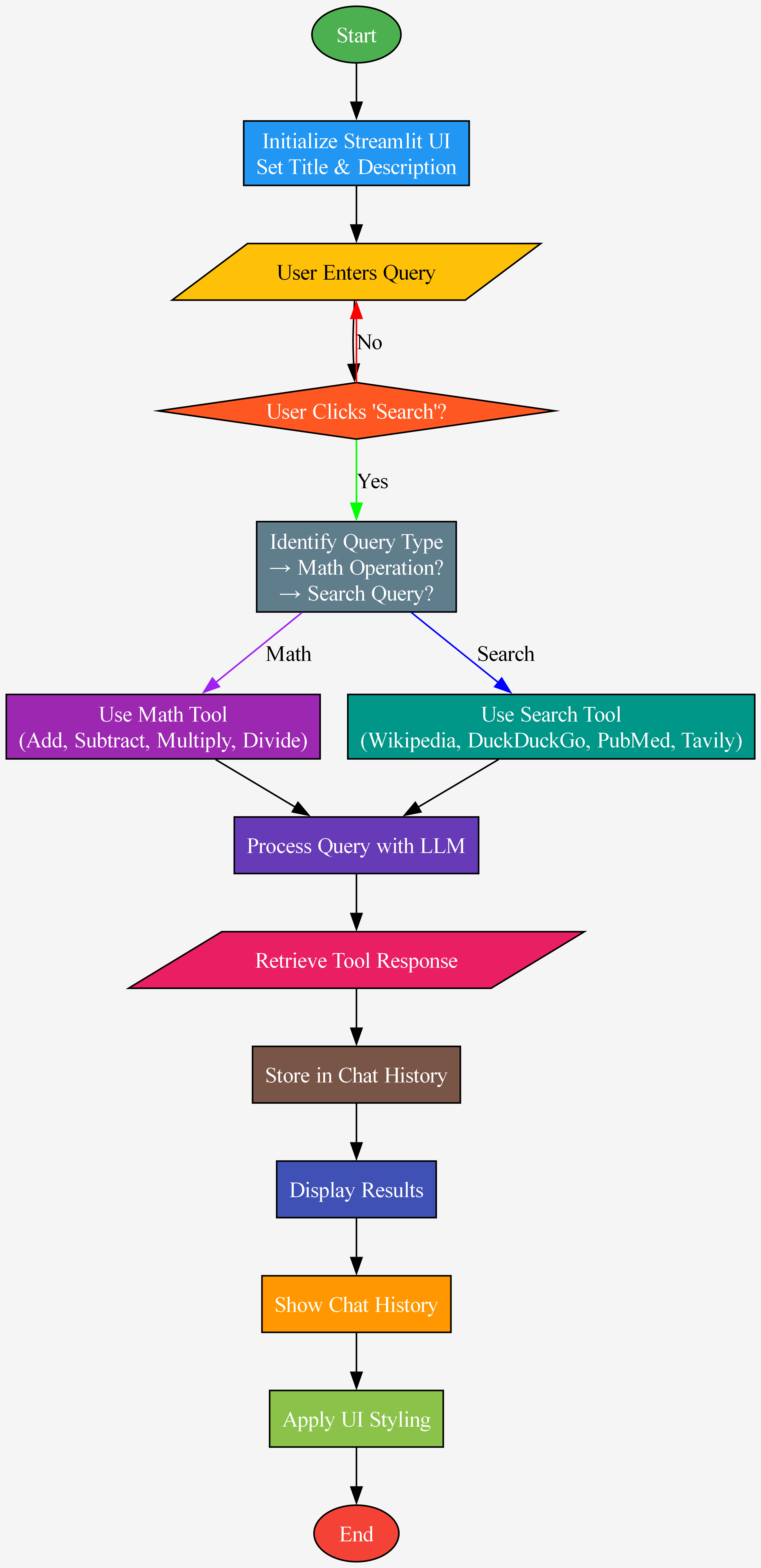

System Architecture

The application implements a multi-tool search pipeline:

- Tool Selection: Automatic tool routing based on query context

- Search Tools Integration: Tavily, Wikipedia, DuckDuckGo, and PubMed

- Math Operations: Built-in arithmetic functions

- Chat History: Per-session log of queries, tools used, and responses (stored in `st.session_state`)

- LLM Integration: Local Ollama instance (llama3.2:3b) that decides which tool to call

Key Components Explained

1. Search Tool Integration

The AI Multi Tool Search Assistant wraps several external search sources as LangChain tools so that the LLM can pick the one that fits each query. The core search integrations are:

📖 Wikipedia Search

Uses Wikipedia as a knowledge base for general information retrieval.

```python
@tool
def wikipedia_search(query: str) -> str:
    """Search Wikipedia for general information."""
    return WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper()).invoke(query)
```

🩺 PubMed Search

Fetches scientific and medical research information from PubMed.

```python
@tool
def pubmed_search(query: str) -> str:
    """Search PubMed for medical, medicines, and life sciences queries."""
    return PubmedQueryRun().invoke(query)
```

🌐 Tavily Real-Time Web Search

Performs real-time searches using the Tavily API to retrieve current information on topics like stock market trends and weather updates.

```python
@tool
def tavily_search(query: str) -> str:
    """Search web for real-time information, stock market, current weather, etc. using Tavily."""
    return tavily_web_search(query)
```

📰 DuckDuckGo Search

Uses DuckDuckGo's search engine to retrieve results related to breaking news, politics, sports, and other trending topics.

```python
@tool
def duckduckgo_search(query: str) -> str:
    """Perform a web search using DuckDuckGo for queries related to current news, politics, sports, etc."""
    return DuckDuckGoSearchRun().invoke(query)
```

The docstrings matter: the `@tool` decorator turns each one into the tool description that the LLM sees, and the model relies on those descriptions to decide which source fits a query.
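The Tavily tool delegates to `tavily_web_search`, defined in the complete implementation below, which turns Tavily's JSON response into Markdown. Pulling that formatting step into a pure function makes it testable without an API key or network access. The sketch below mirrors the original formatting logic; `format_tavily_response` and the sample payload are hypothetical names and data used only for illustration.

```python
def format_tavily_response(data: dict, max_chars: int = 150) -> str:
    """Render a Tavily search response dict as Markdown (mirrors tavily_web_search)."""
    text = f"**Query:** {data.get('query', 'N/A')}\n\n"
    if data.get("answer"):  # present when the request set include_answer=True
        text += f"**Answer:** {data['answer']}\n\n"
    results = data.get("results", [])
    if results:
        text += "**Top Search Results:**\n\n"
        for i, result in enumerate(results, start=1):
            title = result.get("title", "No Title")
            url = result.get("url", "No URL")
            snippet = result.get("content", "No content available")[:max_chars]
            text += f"**{i}. [{title}]({url})**\n{snippet}...\n\n"
    return text


# Hypothetical payload shaped like the fields the app reads from Tavily
sample = {
    "query": "weather in Paris",
    "answer": "Mild and cloudy.",
    "results": [{"title": "Forecast", "url": "https://example.com", "content": "Cloudy, 14°C"}],
}
print(format_tavily_response(sample))
```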

2. Math Operations

The assistant also includes built-in mathematical tools for performing arithmetic operations such as addition, subtraction, multiplication, and division.

➕ Addition

Adds two integers together.

```python
@tool
def add(a: int, b: int) -> int:
    """Add two integers together."""
    return a + b
```

✖️ Multiplication

Multiplies two integers together.

```python
@tool
def multiply(a: int, b: int) -> int:
    """Multiply two integers together."""
    return a * b
```

➖ Subtraction

Subtracts one integer from another.

```python
@tool
def subtract(a: int, b: int) -> int:
    """Subtract b from a."""
    return a - b
```

➗ Division

Divides one integer by another.

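```python
@tool
def divide(a: int, b: int) -> float:
    """Divide a by b."""
    if b == 0:
        raise ValueError("Cannot divide by zero.")
    return a / b  # true division returns a float in Python 3
```

These mathematical operations are implemented as LangChain tools, so the LLM calls them through the same tool-calling mechanism as the search tools.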
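Because Python 3's `/` operator always returns a float (`7 / 2` is `3.5`), an integer return annotation on `divide` would be inaccurate, and a zero divisor needs explicit handling. A hedged sketch of a division tool with a float return type and a clear error on zero, written as a plain function so it runs without LangChain installed (in the app it would keep its `@tool` decorator):

```python
def divide(a: int, b: int) -> float:
    """Divide a by b. Raises ValueError instead of ZeroDivisionError when b is 0."""
    if b == 0:
        raise ValueError("Cannot divide by zero.")
    return a / b  # true division: int / int yields a float in Python 3


print(divide(7, 2))  # → 3.5
print(divide(6, 3))  # → 2.0
```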

3. Tool Routing System

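```python
llm_search = llm.bind_tools(all_tools)  # expose each tool's name, docstring, and argument schema
response = llm_search.invoke(messages)  # response.tool_calls lists the tools the model chose
```

The LLM selects tools automatically based on the query context; the application then runs each requested tool itself.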
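`bind_tools` only advertises the tools to the model. The reply is an `AIMessage` whose `tool_calls` list holds dicts with `name`, `args`, and `id` keys, and the application has to run each requested tool itself. A lookup such as `tool_map[tool_name]` raises `KeyError` if a small model names a tool that was never bound. The framework-free sketch below shows that dispatch step with unknown names and tool failures reported as text; `dispatch_tool_calls` and the stub tools are hypothetical stand-ins for the LangChain objects.

```python
from typing import Any, Callable


def dispatch_tool_calls(
    tool_calls: list[dict[str, Any]],
    tool_map: dict[str, Callable[[dict[str, Any]], Any]],
) -> list[dict[str, Any]]:
    """Run each requested tool and collect {"tool", "response"} records."""
    results = []
    for call in tool_calls:
        name, args = call["name"], call.get("args", {})
        runner = tool_map.get(name)
        if runner is None:
            # The model asked for a tool that was never bound: report it instead of KeyError
            results.append({"tool": name, "response": f"Unknown tool: {name}"})
            continue
        try:
            results.append({"tool": name, "response": runner(args)})
        except Exception as exc:  # e.g. a zero divisor inside a math tool
            results.append({"tool": name, "response": f"Tool error: {exc}"})
    return results


# Hypothetical stand-ins; in the app each value would be a LangChain tool's .invoke method
stub_tools = {
    "add": lambda args: args["a"] + args["b"],
    "divide": lambda args: args["a"] / args["b"],
}
calls = [
    {"name": "add", "args": {"a": 2, "b": 3}, "id": "call_1"},
    {"name": "divide", "args": {"a": 1, "b": 0}, "id": "call_2"},
    {"name": "translate", "args": {"text": "hola"}, "id": "call_3"},
]
for record in dispatch_tool_calls(calls, stub_tools):
    print(record)
```

Returning errors as strings keeps the Streamlit page rendering even when one tool call fails.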

User Interface Components

1. Search Input

```python
query = st.text_area("Enter your search query:", height=150)
```

A 150-pixel-tall multi-line text area for longer queries.

2. Results Display

```python
st.write(f"**🔧 Tool Used:** {data['tool']}")
st.write(f"**📄 Response:** {data['response']}")
```

Each result is displayed together with the name of the tool that produced it.

3. Chat History

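```python
for msg in st.session_state.messages[::-1]:  # latest first
    with st.expander(f"🗂 Query: {msg['query']}", expanded=True):
        st.write(f"**🔧 Tool Used:** {msg['tool']}")
        st.write(f"**📄 Response:** {msg['response']}")
```

Collapsible, newest-first history of past queries, kept in `st.session_state` for the duration of the browser session.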

Complete Implementation: multi_tool_search_assistant.py

```python
"""
Copyright (c) 2025 AI Leader X (aileaderx.com). All Rights Reserved.

This software is the property of AI Leader X. Unauthorized copying, distribution,
or modification of this software, via any medium, is strictly prohibited without
prior written permission. For inquiries, visit https://aileaderx.com
"""

import os

import requests
import streamlit as st
from dotenv import load_dotenv
from langchain_community.tools import DuckDuckGoSearchRun, PubmedQueryRun, WikipediaQueryRun
from langchain_community.utilities import WikipediaAPIWrapper
from langchain_core.messages import HumanMessage
from langchain_core.tools import tool
from langchain_ollama import ChatOllama

# Read TAVILY_API_KEY (and any other settings) from a local .env file
load_dotenv()

# ----------------------------
# Initialize Streamlit UI
# ----------------------------
st.set_page_config(page_title="AI Multi Tool Search Assistant", layout="wide")

# ----------------------------
# Title and Description
# ----------------------------
st.title("🔍 AI Multi Tool Search Assistant")
st.markdown(
    "Enter your query below and the AI will fetch the best results using appropriate tools "
    "[Math tools, Tavily Search, WikipediaQueryRun, DuckDuckGoSearchRun, PubmedQueryRun]"
)

# ----------------------------
# Initialize LLM (Ollama Model)
# ----------------------------
llm = ChatOllama(model="llama3.2:3b", base_url="http://localhost:11434")

# ----------------------------
# Tavily Search Function
# ----------------------------
def tavily_web_search(query: str) -> str:
    """Call the Tavily REST API and format the answer and top results as Markdown."""
    api_url = "https://api.tavily.com/search"
    api_key = os.getenv("TAVILY_API_KEY")  # keep the key out of source control
    if not api_key:
        return "Error: TAVILY_API_KEY is not set."

    payload = {
        "query": query,
        "topic": "general",
        "search_depth": "basic",
        "max_results": 3,
        "include_answer": True,
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }

    try:
        response = requests.post(api_url, json=payload, headers=headers, timeout=10)
        response.raise_for_status()
        data = response.json()
    except requests.exceptions.RequestException as e:
        return f"Error fetching results: {e}"

    result_text = f"**Query:** {data.get('query', 'N/A')}\n\n"
    if data.get("answer"):
        result_text += f"**Answer:** {data['answer']}\n\n"

    results = data.get("results", [])
    if results:
        result_text += "**Top Search Results:**\n\n"
        for i, result in enumerate(results, start=1):
            title = result.get("title", "No Title")
            url = result.get("url", "No URL")
            content = result.get("content", "No content available")[:150]
            result_text += f"**{i}. [{title}]({url})**\n{content}...\n\n"
    return result_text

# ----------------------------
# Custom Math Tools
# ----------------------------
@tool
def add(a: int, b: int) -> int:
    """Add two integers together."""
    return a + b

@tool
def multiply(a: int, b: int) -> int:
    """Multiply two integers together."""
    return a * b

@tool
def subtract(a: int, b: int) -> int:
    """Subtract b from a."""
    return a - b

@tool
def divide(a: int, b: int) -> float:
    """Divide a by b."""
    if b == 0:
        raise ValueError("Cannot divide by zero.")
    return a / b

# ----------------------------
# Search Tools
# ----------------------------
@tool
def wikipedia_search(query: str) -> str:
    """Search Wikipedia for general information."""
    return WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper()).invoke(query)

@tool
def pubmed_search(query: str) -> str:
    """Search PubMed for medical, medicines, and life sciences queries."""
    return PubmedQueryRun().invoke(query)

@tool
def tavily_search(query: str) -> str:
    """Search web for real-time information, stock market, current weather, etc. using Tavily."""
    return tavily_web_search(query)

@tool
def duckduckgo_search(query: str) -> str:
    """Perform a web search using DuckDuckGo for queries related to current news, politics, sports, etc."""
    return DuckDuckGoSearchRun().invoke(query)

# ----------------------------
# Initialize chat history
# ----------------------------
if "messages" not in st.session_state:
    st.session_state.messages = []

# ----------------------------
# User Input
# ----------------------------
query = st.text_area(
    "Enter your search query:",
    key="query",
    help="Type your question here",
    height=150,  # height in pixels
)

# ----------------------------
# Search Execution
# ----------------------------
if st.button("Search"):
    if query:
        math_tools = [add, subtract, multiply, divide]
        search_tools = [duckduckgo_search, wikipedia_search, pubmed_search, tavily_search]
        all_tools = math_tools + search_tools
        tool_map = {t.name: t for t in all_tools}

        # Bind tools to the LLM and let it choose which ones to call
        llm_search = llm.bind_tools(all_tools)
        messages = [HumanMessage(query)]
        response = llm_search.invoke(messages)

        # Run every tool the model requested
        response_data = []
        for tool_call in response.tool_calls:
            tool_name = tool_call["name"]
            selected_tool = tool_map.get(tool_name)  # named to avoid shadowing the @tool decorator
            if selected_tool is None:
                result = f"Unknown tool requested: {tool_name}"
            else:
                try:
                    result = selected_tool.invoke(tool_call["args"])
                except Exception as exc:  # e.g. division by zero or a failed search call
                    result = f"Tool error: {exc}"
            response_data.append({"tool": tool_name, "response": result})

            # Append response to chat history
            st.session_state.messages.append(
                {"query": query, "tool": tool_name, "response": result}
            )

        # Display tool responses
        if response_data:
            for data in response_data:
                st.write(f"**🔧 Tool Used:** {data['tool']}")
                st.write(f"**📄 Response:** {data['response']}")
        else:
            st.warning("No tool was used or no response was generated.")

# ----------------------------
# Display Chat History
# ----------------------------
st.subheader("📜 Chat History")
if st.session_state.messages:
    for msg in st.session_state.messages[::-1]:  # show latest first
        with st.expander(f"🗂 Query: {msg['query']}", expanded=True):
            st.write(f"**🔧 Tool Used:** {msg['tool']}")
            st.write(f"**📄 Response:** {msg['response']}")
else:
    st.info("No search history yet. Start by entering a query!")

# ----------------------------
# Styling for Better UI
# ----------------------------
# The custom CSS was not preserved in this copy; place any <style> rules inside the string.
st.markdown(
    """
    """,
    unsafe_allow_html=True,
)
```

Running the Application

```shell
streamlit run multi_tool_search_assistant.py
```

Access the interface at:

- Local URL: http://localhost:8501

- Network URL: http://[your-ip]:8501

Required services:

- Ollama server running on port 11434

- Internet connection for API access

Conclusion

The AI Multi Tool Search Assistant is a context-aware query-processing app that brings multiple search and computational tools together behind a single interface. It offers:

- Intelligent Tool Selection: Dynamically selects the most appropriate tool based on the query type.

- Hybrid Search Capabilities: Combines real-time web search (DuckDuckGo, Tavily) with reference and research sources (Wikipedia, PubMed).

- Mathematical Computation Support: Includes built-in tools for performing addition, subtraction, multiplication, and division.

- Session Chat History: Keeps a structured history of queries, tools used, and responses for the current session, so users can review previous searches.

- Extensible Architecture: A new tool can be added by defining another `@tool` function and including it in the list of tools bound to the LLM.

Built with Streamlit, the application provides an interactive web interface. It uses LangChain's tool-binding capabilities to route each user query to a suitable tool and display the tool's output.

This system serves as an adaptable starting point for AI-powered search, combining general knowledge, research literature, and basic computation in a single app.

Sequence Flow of AI Multi Tool Search Assistant

AI Multi Tool Search Assistant - Streamlit UI
