LangGraph Based Tool-Using Agent with Local Meta-LLaMA-3 (LM Studio)

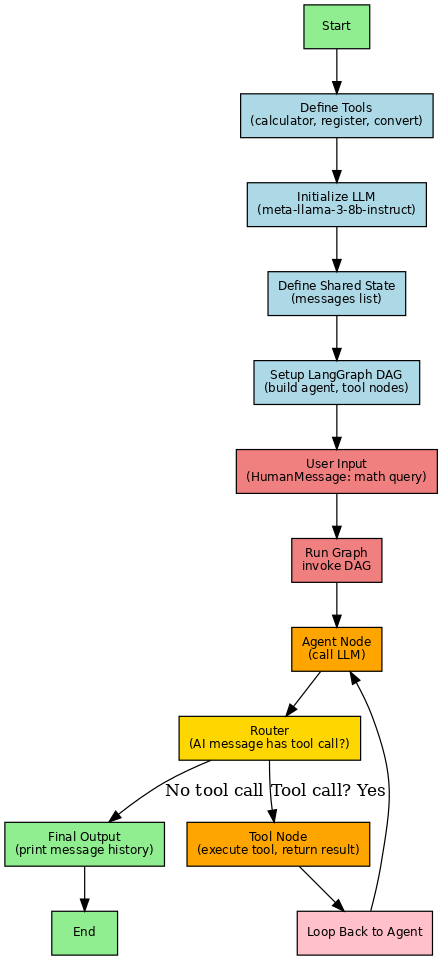

LangGraph Code Agent Flow

[ Building a Dynamic Tool-Based Agent Loop with LangGraph + LangChain ]

LangGraph Overview

LangGraph is a cutting-edge orchestration framework that empowers developers to build dynamic, stateful workflows using a graph-based abstraction over Large Language Models (LLMs). It is a powerful extension of the LangChain ecosystem and is ideal for implementing intelligent agentic systems with conditional routing, iterative reasoning, and memory-based transitions.

At the heart of LangGraph is a directed graph in which nodes represent functional blocks (like LLM agents, tools, or decision routers) and edges represent transitions based on the system's evolving state. Unlike a DAG (Directed Acyclic Graph), a LangGraph graph may contain cycles, which is what makes agent loops possible. This makes it a good fit for workflows like tool-augmented agents, iterative Q&A bots, reasoning chains, and decision trees powered by LLMs.

Key Highlights of LangGraph:

- 🔄 StateGraph API: A high-level API to define states, transitions, and node logic in a composable and scalable manner.

- 🧠 Shared Typed State: Declares the state schema with Python type annotations (e.g., a `TypedDict`), keeping the keys that nodes read and write consistent across the graph.

- 🛠️ Tool Integration: LangGraph works seamlessly with LangChain tools and OpenAI tool-calling protocols. This enables conditional execution of tools like calculators, web scrapers, or vector search engines directly within the graph.

- 🧭 Conditional Routing: Nodes can branch to different paths based on LLM output or tool results—ideal for complex decision trees and agent loops.

- ♻️ Looping Support: Enables feedback loops where the LLM can reflect, retry, or re-route based on tool results or custom logic—key for agent refinement and autonomous planning.

- 🌐 OpenAI-Compatible: Supports OpenAI's tool-calling schema and works with both cloud-hosted models and local models served through OpenAI-compatible servers (e.g., vLLM, LM Studio, Ollama).

- 📈 Production Ready: Supports asynchronous execution, advanced logging, observability, and can be deployed in production using Python’s asyncio or distributed task systems.

LangGraph empowers developers, AI engineers, and automation architects to move beyond single-turn prompts and build sophisticated LLM workflows that reason, plan, and adapt based on dynamic input and execution context. Whether you're building a multimodal agent, a document Q&A pipeline, or a full-fledged reasoning system, LangGraph offers the structure and flexibility you need.

LangGraph Agent Flow – Architecture

The LangGraph agent flow demonstrates a structured loop where a local LLM agent interacts with tools using a directed graph-based approach. This architecture manages state, decisions, and tool usage across modular nodes, which makes it well suited to building intelligent and extensible LLM applications.

🧠 Core Flow Logic:

- Human Input: A user asks a question, such as "What is 21 multiplied by 2?".

- Agent Node: The LLM (e.g., Meta-Llama-3 served by vLLM or LM Studio) analyzes the input and decides whether a tool is needed.

- Tool Router: The router checks whether the AI's response includes a `tool_call`. If it does, the flow transitions to the corresponding tool node.

- Tool Node: The requested tool (such as the calculator) is executed, and its result is wrapped in a `ToolMessage`.

- Return to Agent: The result is fed back into the graph, where the agent uses it to generate the final output or continue the interaction.

- End State: If no further tool call is detected, the graph exits and the final response is returned to the user.
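The loop above can be traced in plain Python before bringing in LangGraph or an LLM. The sketch below is a simulation under stated assumptions: messages are plain dicts rather than LangChain message objects, `fake_agent` is a hypothetical scripted stand-in for the model, and the toy `calculator` only handles `"<int> <op> <int>"` expressions.

```python
import operator

# Plain-Python simulation of the agent → router → tool loop (no LangGraph, no LLM).
# Messages are plain dicts here; the real flow uses LangChain message objects.


def calculator(expression: str) -> str:
    # Hypothetical toy tool: handles "<int> <op> <int>" only.
    left, op, right = expression.split()
    ops = {"+": operator.add, "-": operator.sub, "*": operator.mul}
    return str(ops[op](int(left), int(right)))


TOOLS = {"calculator": calculator}


def fake_agent(messages: list[dict]) -> dict:
    # Scripted stand-in for the LLM: request the tool first, then answer with its result.
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "ai", "content": f"The answer is {last['content']}.", "tool_calls": []}
    call = {"id": "call_1", "name": "calculator", "args": {"expression": "21 * 2"}}
    return {"role": "ai", "content": "", "tool_calls": [call]}


def run(question: str) -> list[dict]:
    messages = [{"role": "human", "content": question}]
    while True:
        ai_msg = fake_agent(messages)          # Agent node
        messages.append(ai_msg)
        if not ai_msg["tool_calls"]:           # Router: no tool call → end state
            return messages
        for call in ai_msg["tool_calls"]:      # Tool node: run each requested tool
            result = TOOLS[call["name"]](**call["args"])
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
        # Loop back: the tool results are fed to the agent on the next pass


transcript = run("What is 21 multiplied by 2?")
for msg in transcript:
    print(msg["role"].upper(), msg.get("tool_calls") or msg["content"])
```

The resulting transcript has the same shape as the LangGraph run: human message, AI tool request, tool result, final AI answer.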

💡 Design Strengths

- Modularity: Each node (agent, tool, router) is reusable, testable, and independent.

- Adaptive Looping: Agent–Tool cycles can repeat based on need, enabling iterative reasoning and feedback refinement.

- Graph-Driven Execution: LangGraph manages transitions and shared state, eliminating complex if-else logic.

- OpenAI-Compatible Tool Calling: Supports structured tool usage and argument parsing as per OpenAI schema.

🗺️ Code Flow Diagram

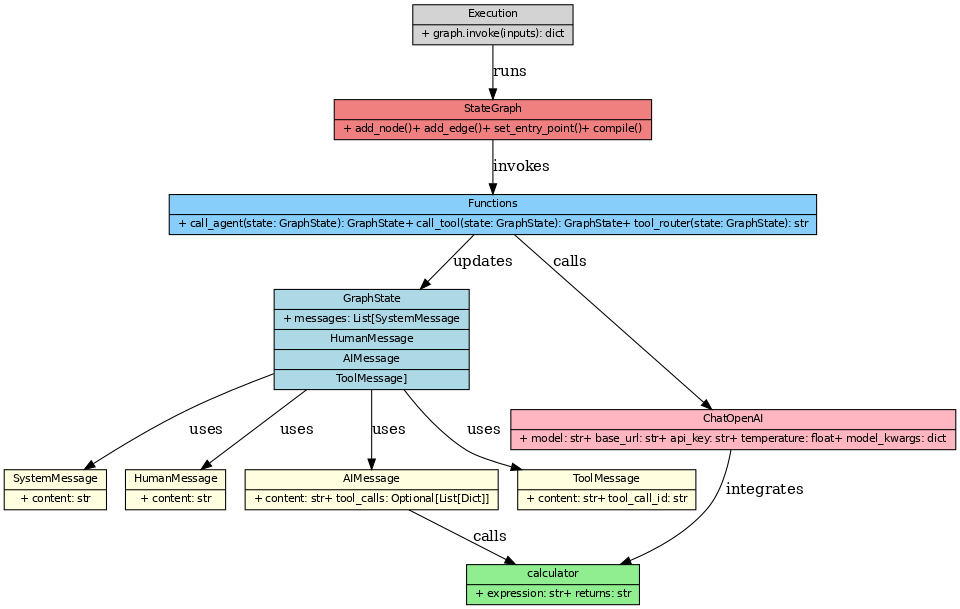

Below is the high-level code flow diagram showing how LangGraph coordinates agent, tool, router, and shared state:

📦 Class Diagram

The following diagram outlines the class-level structure of the LangGraph agent system, including key entities such as GraphState, call_agent, and tool_registry:

🛠️ Pre-Requisites

1. Running Meta-LLaMA-3 Locally via LM Studio

To run this LangGraph-based agent flow, ensure that LM Studio is up and serving the meta-llama-3-8b-instruct model. LM Studio provides an OpenAI-compatible REST API that seamlessly integrates with LangChain and LangGraph.

- Model: meta-llama-3-8b-instruct

- LLM Server URL: http://192.168.37.1:1234/v1

- API Key: Not required (use a dummy value such as "not-needed")

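Replace 192.168.37.1 with the address of the machine running LM Studio. Test your LM Studio instance by visiting http://192.168.37.1:1234/docs, or by requesting the OpenAI-compatible model list at http://192.168.37.1:1234/v1/models; the response should include meta-llama-3-8b-instruct.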
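A standard-library check of the server can be scripted as below. This is a sketch assuming LM Studio answers the OpenAI-compatible `GET /v1/models` endpoint with an OpenAI-style list payload; `BASE_URL`, `parse_model_ids`, and `list_models` are hypothetical names for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio serves the OpenAI-compatible GET /v1/models endpoint.
BASE_URL = "http://192.168.37.1:1234/v1"  # Adjust to the machine running LM Studio


def parse_model_ids(payload: dict) -> list[str]:
    # OpenAI-style list response: {"object": "list", "data": [{"id": "..."}, ...]}
    return [item["id"] for item in payload.get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    # Network errors (connection refused, timeout) surface as OSError subclasses.
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))
```

Calling `"meta-llama-3-8b-instruct" in list_models()` inside a `try`/`except OSError` block gives a quick yes/no before starting the graph.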

2. Python Environment Setup

It is recommended to use a virtual environment for your LangGraph development to manage dependencies cleanly.

```bash
# (Optional) Create and activate a virtual environment
conda create -n langgraph_env python=3.11 -y
conda activate langgraph_env
```

3. Required Python Packages

Install the following Python libraries:

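```bash
pip install langchain
pip install langgraph
pip install langchain_openai
pip install typing-extensions
# Optional: only needed for draw_png() in the visualization step
pip install pygraphviz
```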

With the model running and these packages installed, you’re ready to run the LangGraph flow with tool calling enabled.

Complete Code: LangGraph Agent Flow

This Python code defines a LangGraph flow that integrates an LLM with tool calling, conditional routing, and state-based transitions.

```python
"""
Copyright (c) 2025 AI Leader X (aileaderx.com). All Rights Reserved.
This software is the property of AI Leader X. Unauthorized copying, distribution,
or modification of this software, via any medium, is strictly prohibited without
prior written permission. For inquiries, visit https://aileaderx.com
"""

# -------------------------
# Imports
# -------------------------

# LangGraph core for graph-based agent flow
from langgraph.graph import StateGraph, END

# LangChain core message types
from langchain_core.messages import HumanMessage, AIMessage, ToolMessage, SystemMessage

# LangChain tool definition decorator
from langchain_core.tools import tool

# Utility to convert LangChain tools into OpenAI-compatible schema
from langchain_core.utils.function_calling import convert_to_openai_tool

# OpenAI-compatible Chat Model (backed by local LLM endpoint like LM Studio)
from langchain_openai import ChatOpenAI

# Typing helpers for state annotation
from typing import Annotated, TypedDict, List, Union

import logging

# -------------------------
# 1. TOOL DEFINITIONS
# -------------------------

@tool
def calculator(expression: str) -> str:
    """
    A basic calculator tool that evaluates mathematical expressions.
    Example input: '2 + 2', '5 * 10'
    """
    print("🧮 Evaluating expression:", expression)
    logging.info(f"Evaluating: {expression}")
    try:
        # Python's eval is used for quick arithmetic; it is NOT safe for untrusted input
        return str(eval(expression))
    except Exception as e:
        return f"Error evaluating expression: {str(e)}"

# Register tools and convert them to OpenAI-compatible schema
tools = [calculator]
openai_tools = [convert_to_openai_tool(t) for t in tools]  # OpenAI-style tool definitions
tool_registry = {t.name: t for t in tools}  # Mapping tool name → tool object

# -------------------------
# 2. LLM Setup (LM Studio or other local OpenAI-compatible API)
# -------------------------

llm = ChatOpenAI(
    model="meta-llama-3-8b-instruct",  # Local model name
    base_url="http://192.168.37.1:1234/v1",  # LM Studio URL from Pre-Requisites; use your server's address
    api_key="not-needed",  # Dummy API key (not used locally)
    temperature=0.2,  # Low temperature for more consistent output
).bind_tools(openai_tools, tool_choice="auto")  # Expose tools; the model decides when to call one

# -------------------------
# 3. LangGraph Shared State Definition
# -------------------------

class GraphState(TypedDict):
    # Shared state for LangGraph. Tracks all messages in the conversation.
    # The "messages" string in Annotated is only a label, not a reducer, so each
    # node returns the full updated list, which replaces the previous value.
    messages: Annotated[List[Union[SystemMessage, HumanMessage, AIMessage, ToolMessage]], "messages"]

# -------------------------
# 4. Agent Node Function
# -------------------------

def call_agent(state: GraphState) -> GraphState:
    """
    Invokes the LLM agent with the current message history.
    """
    print("🤖 Agent invoked")
    try:
        response = llm.invoke(state["messages"])  # Pass full message history to the LLM
        print("🤖 Agent response:", response.content)
        return {"messages": state["messages"] + [response]}  # Append LLM response to message history
    except Exception as e:
        error_msg = f"Agent invocation failed: {str(e)}"
        print(f"❌ {error_msg}")
        return {"messages": state["messages"] + [AIMessage(content=error_msg)]}

# -------------------------
# 5. Tool Node Function
# -------------------------

def call_tool(state: GraphState) -> GraphState:
    """
    Executes every tool call requested by the last AI message and appends one
    ToolMessage per call (OpenAI-style APIs expect a reply to each tool_call_id).
    """
    print("🛠️ Tool node triggered")
    messages = state["messages"]
    last_msg = messages[-1]  # Last AI message, which may contain tool calls

    if not getattr(last_msg, "tool_calls", None):
        print("⚠️ No tool calls in last message")
        return {"messages": messages}

    tool_messages = []
    for tool_call in last_msg.tool_calls:
        name = tool_call.get("name")
        args = tool_call.get("args", {})
        print(f"🔧 Tool Call: {name} | Args: {args}")

        if name not in tool_registry:  # Validate tool existence
            content = f"Tool {name} not found in registry"
            print(f"❌ {content}")
        else:
            try:
                # Invoke the registered tool with the parsed arguments
                content = str(tool_registry[name].invoke(args))
                print("✅ Tool Output:", content)
            except Exception as e:
                content = f"Tool execution error: {str(e)}"
                print(f"❌ {content}")

        tool_messages.append(ToolMessage(tool_call_id=tool_call["id"], content=content))

    return {"messages": messages + tool_messages}

# -------------------------
# 6. Router Function
# -------------------------

def tool_router(state: GraphState) -> str:
    """
    Determines whether to route to the 'tool' node or end the flow.
    If the last AI message contains a tool call → go to tool node, else end.
    """
    last_msg = state["messages"][-1]
    if isinstance(last_msg, AIMessage) and hasattr(last_msg, "tool_calls") and last_msg.tool_calls:
        print("📡 Routing to tool node")
        return "tool"
    print("📡 Routing to end node")
    return "end"

# -------------------------
# 7. LangGraph Graph Setup
# -------------------------

# Initialize LangGraph with shared state definition
builder = StateGraph(GraphState)

# Define graph nodes and their functions
builder.add_node("agent", call_agent)
builder.add_node("tool", call_tool)

# Set the start of the graph
builder.set_entry_point("agent")

# Define dynamic transitions based on tool_router output
builder.add_conditional_edges("agent", tool_router, {"tool": "tool", "end": END})

# After a tool is called, go back to the agent to continue the loop
builder.add_edge("tool", "agent")

# Compile graph into runnable object
graph = builder.compile()

# -------------------------
# 7.1 Visualize the LangGraph Graph
# -------------------------

try:
    print("\n🖼️ LangGraph ASCII View:")
    graph.get_graph().print_ascii()  # Text-based view of the graph
    graph.get_graph().draw_png("langgraph_dag.png")  # Save graph as PNG (requires pygraphviz)
    print("✅ PNG graph saved as: langgraph_dag.png")
    # Optional: draw_svg
    # graph.get_graph().draw_svg("langgraph_dag.svg")
    # print("✅ SVG graph saved as: langgraph_dag.svg")
except Exception as e:
    print(f"⚠️ Graph visualization failed: {e}")

# -------------------------
# 8. Run the Graph
# -------------------------

if __name__ == "__main__":
    # Define conversation input
    inputs = {
        "messages": [
            SystemMessage(content="You are a math assistant. When asked to perform calculations, use the calculator tool."),
            HumanMessage(content="What is 21 multiplied by 2? Please use the calculator tool."),
        ]
    }

    print("\n🚀 Running graph...\n")
    result = graph.invoke(inputs)  # Run the compiled LangGraph flow with the input state

    # -------------------------
    # 9. Final Output
    # -------------------------
    print("\n🧾 Final Conversation:")
    for msg in result["messages"]:
        print(f"{msg.type.upper()}: {msg.content}")
```

LangGraph Console Output

Below is the console output produced by running the agent-tool workflow.

Download Console Output

LangGraph .PNG

Below is the PNG rendering of the compiled graph (langgraph_dag.png), produced by the visualization step.

Download LangGraph .PNG

Conclusion and Future Enhancements

This LangGraph-based implementation demonstrates how modern LLM agents can interact dynamically with tools (like calculators or external APIs) through a modular, stateful graph architecture. By structuring the flow using LangGraph’s StateGraph API, the system keeps control flow interpretable and traceable: once the LLM has responded, the router function alone decides which node runs next.

LangGraph natively integrates with LangChain-compatible models and tools—including those served locally through OpenAI-style APIs (e.g., LM Studio or vLLM). It empowers developers to move beyond linear prompt-response interactions and build intelligent, reactive, and multi-turn agentic workflows.

✅ Key Advantages

- 📘 Declarative Graph Flow: Cleanly defines agent → tool → router logic using named nodes and typed state.

- 🔁 Bounded, Iterative Loops: Supports re-routing and iterative feedback loops based on real-time tool output; LangGraph's recursion limit (25 steps by default) halts a loop that never reaches END.

- ⚙️ Tool Calling with Schema Validation: Leverages OpenAI-compatible tool metadata for structured interactions.

- 🧠 Stateless Execution: No checkpointer is configured, so each run starts only from its input state; this suits production use cases where runs must be isolated.

- 🌐 Works with Local or Cloud Models: Compatible with any OpenAI-style API (e.g., LM Studio, vLLM, Ollama).

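- 🛡️ Explicit Tool Registry: Only tools registered in `tool_registry` can be invoked by name, and unknown tool names produce an error message instead of executing anything. Note that the sample calculator still uses `eval`, which is unsafe for untrusted input.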

🚀 Ready for Production: Best Practices

- 🔐 Security: Avoid calling `eval()` directly in production. Use math parsers like `sympy` or sandboxed execution environments.

- 📊 Observability: Integrate LangGraph with tracing and monitoring tools (e.g., LangSmith, OpenTelemetry, Prometheus) to monitor node transitions and tool usage.

- 🧪 Testing: Unit test each node function independently (e.g., agent, router, tool) using mock state inputs.

- ⛓️ Concurrency: Use `async def` versions of node functions and deploy with async web stacks (e.g., FastAPI served by Uvicorn).

- 📁 Modular Design: Structure your graph flows in isolated files or classes to support pluggable workflows (e.g., per domain or user persona).

🔮 Future Enhancements

- 📚 Extend the agent with multiple tools, including web scraping, SQL, PDF, or vector search tools.

- 🧩 Integrate with a LangChain RAG stack for document Q&A with context-aware responses.

- 📈 Add visual graph introspection dashboards with tools like `pyvis`, `graphviz`, or `streamlit`.

- 🌍 Introduce multilingual support via translation tools or multilingual LLMs.

- 🗃️ Add persistent memory or long-term state across sessions using Redis, Pinecone, or Qdrant.

With LangGraph’s flexibility and local LLM serving (via LM Studio or vLLM), this architecture serves as a robust foundation for enterprise-grade automation, intelligent agent systems, and multimodal GenAI applications.
