Model Context Protocol (MCP) with SSE Mode-Based PPT Generator

Model Context Protocol (MCP)


Introduction: The Model Context Protocol (MCP) is an open, modular protocol designed to standardize how applications provide structured context to Large Language Models (LLMs). Much like USB-C unifies device connectivity across different hardware, MCP creates a unified interface for AI applications to interact with models, tools, and data sources in a clean, scalable, and interoperable manner. It simplifies the orchestration of context-aware workflows while enabling flexibility and consistency across diverse AI-driven use cases.

Why Choose MCP?

- Seamless Integration: MCP allows you to easily connect LLMs to various data sources, APIs, and computational tools, enabling dynamic, context-rich interactions.

- Model-Agnostic Architecture: You can effortlessly switch between different LLM providers (e.g., OpenAI, DeepSeek, LLaMA) without restructuring your application logic, thanks to standardized interfaces.

- Security & Best Practices: MCP encourages the use of modular resource access, logging, and separation of client-server responsibilities, supporting secure and observable data operations. This makes it easier to comply with data governance and audit requirements.

- Developer-Friendly: With reusable templates, modular tools, and clean abstractions, MCP reduces boilerplate and allows teams to focus on core functionality rather than infrastructure.
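
To make the model-agnostic point concrete, here is a minimal sketch of application code that depends on a small interface rather than on one provider. The names `ChatModel`, `EchoModel`, `UppercaseModel`, and `summarize` are hypothetical stand-ins for illustration; they are not part of MCP or of any vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything with a complete() method qualifies."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one provider; it simply echoes the prompt back."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Stand-in for a second provider with different behavior."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: ChatModel, text: str) -> str:
    """Application logic depends only on the ChatModel interface."""
    return model.complete(f"Summarize: {text}")


if __name__ == "__main__":
    # Swapping the backend does not require touching summarize().
    for backend in (EchoModel(), UppercaseModel()):
        print(summarize(backend, "MCP standardizes context"))
```

Because `summarize` only knows about the interface, replacing one backend with another is a one-line change at the call site.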

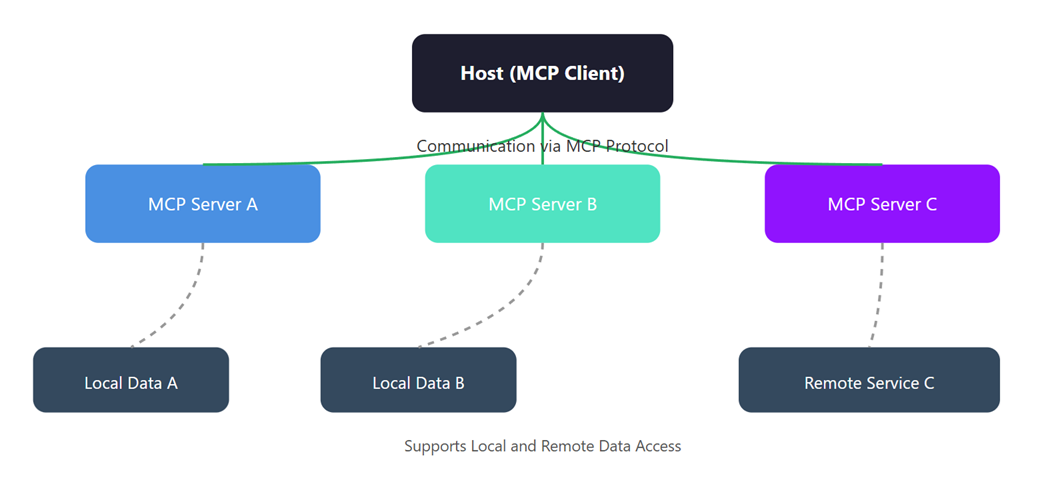

General Architecture

- MCP Hosts: These are the front-end applications or interfaces that consume data and services exposed via the MCP framework. Examples include web-based dashboards, data-driven visualizations, or AI-driven user experiences built using technologies like Streamlit, React, or custom frontend platforms. Hosts serve as the entry point for users to access AI tools and resources.

- MCP Clients: Each MCP Host communicates with its own MCP Client, typically in a one-to-one configuration. The client is responsible for managing the connection to the backend server, issuing requests (e.g., for tool execution or data retrieval), and handling responses. It acts as a thin layer that abstracts communication between the frontend and the server.

- MCP Servers: These are the core processing units in the architecture. MCP Servers expose and manage a variety of tools, prompt templates, and data resources. Each server is designed to handle requests from its paired client, execute appropriate logic, and return structured responses. They can operate locally or in distributed environments.

- Local/Remote Sources: These include external data endpoints or internal knowledge bases that MCP Servers interact with. Data can come from local file systems, remote APIs, vector databases, or other systems, and is accessed as needed by tools or resources to fulfill user requests.
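
The request and response flow between a client and a server can be sketched as follows. MCP messages follow JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool name and an arguments object. The dispatcher below is a toy illustration under that assumption: `TOOLS`, `word_count`, and `handle_request` are hypothetical names, and a real server would rely on the MCP SDK rather than hand-rolled dispatch.

```python
import json

# Hypothetical tool table; a real MCP server builds this from registered tools.
TOOLS = {
    "word_count": lambda arguments: str(len(arguments["text"].split())),
}


def _error(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    body = {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    return json.dumps(body)


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    request = json.loads(raw)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _error(request_id, -32601, "Method not found")

    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")

    # Tool output is wrapped as a list of content items, here a single text item.
    text = tool(params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "word_count", "arguments": {"text": "model context protocol"}},
    }
    print(handle_request(json.dumps(call)))
```

The client in this article reads results the same way, through `response.content[0].text`.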

Core Concepts of MCP

- Client Interfaces: These are the front-end applications through which users interact with the MCP system. They can be built using frameworks such as Streamlit for rapid prototyping, Flask for lightweight web APIs, or React for dynamic, user-friendly interfaces. Clients are responsible for capturing user input and displaying responses from the server.

- Server Logic: This is the backend layer that handles routing, coordination of modules, and execution of business logic. It acts as the control center, orchestrating the interaction between prompts, tools, and resources based on client requests.

- Prompt Templates: Structured input templates used to guide interactions with language models. They ensure consistency and improve the quality of generated outputs by defining standardized prompts for various tasks.

- Resources: Modular components or datasets that provide domain-specific knowledge or utilities to the system. These can include documents, embeddings, APIs, or any external systems the MCP needs to query or reference.

- Tools: Executable functions or integrations (e.g., LLM calls, data transformers, TTS engines) that perform specific tasks. Tools can be reused across prompts and workflows, enhancing modularity and flexibility.

- Logs: Structured logs are generated throughout the system to track inputs, outputs, errors, and performance metrics. These logs aid in debugging, monitoring, and auditing the MCP pipeline for traceability and observability.
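
Two of these concepts, prompt templates and structured logs, are easy to illustrate with the standard library alone. The sketch below is only an illustration: `SLIDE_PROMPT`, `render_prompt`, and `log_event` are hypothetical names, and the template wording is made up for the example.

```python
import json
import logging
from string import Template

# Hypothetical template; $slides and $topic are filled in at call time.
SLIDE_PROMPT = Template("Write $slides slide titles for a presentation on: $topic.")


def render_prompt(topic: str, slides: int) -> str:
    """Fill the template so every request uses the same prompt wording."""
    return SLIDE_PROMPT.substitute(topic=topic, slides=slides)


def log_event(logger: logging.Logger, event: str, **fields) -> str:
    """Emit one JSON object per log line so logs stay machine-readable."""
    record = json.dumps({"event": event, **fields}, sort_keys=True)
    logger.info(record)
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(message)s")
    log = logging.getLogger("mcp-demo")
    prompt = render_prompt("Model Context Protocol", 5)
    log_event(log, "prompt_rendered", topic="Model Context Protocol", length=len(prompt))
```

Keeping the template in one place means a wording change applies everywhere, and JSON log lines can be filtered or aggregated without custom parsing.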

Benefits of MCP

- Modularity: MCP enables a clean separation of concerns by organizing functionality into distinct, interchangeable modules. This improves maintainability and allows individual components to evolve independently.

- Reusability: Components developed within the MCP framework can be reused across multiple projects or use cases with minimal changes, accelerating development and reducing duplication of effort.

- Traceability: With well-defined interfaces and communication protocols, MCP makes it easy to track the flow of data and logic across the system, simplifying debugging and auditing.

- Extensibility: MCP’s plug-and-play architecture supports seamless integration of new tools, models, or services, enabling rapid adaptation to changing requirements without disrupting existing workflows.

- Observability: MCP encourages logging, monitoring, and metrics collection across components, offering better visibility into the system’s internal state and facilitating proactive issue resolution.

How MCP Enhances Generative AI

The Model Context Protocol (MCP) acts as a powerful abstraction layer that significantly augments the capabilities of generative AI systems. By defining structured interactions between clients, servers, tools, and data sources, MCP enables the development of intelligent, adaptive, and scalable AI-driven applications.

- Dynamic Tool Use: MCP enables LLMs to dynamically invoke and orchestrate external tools such as summarizers, translators, web scrapers, TTS engines, or custom APIs. This transforms LLMs from passive responders into proactive agents that can perform tasks intelligently by choosing the right tool for the context.

- Context-Aware Pipelines: With structured flows and persistent context tracking, MCP ensures that all tool invocations are informed by prior inputs, outputs, and state. This leads to more coherent responses, better memory across user sessions, and context-sensitive reasoning.

- Agentic Behavior: MCP facilitates the creation of agent-like behaviors in LLMs by enabling decision-making across multiple steps. LLMs can plan, execute, and adapt workflows by interacting with tools, updating context, and rerouting flows as needed.

- Multi-Modal Workflows: MCP supports seamless integration of text, image, audio, and potentially video-based tools, making it suitable for advanced multi-modal applications like AI-driven content creation, presentations, document analysis, or multilingual video generation.

- Composable Logic: By separating logic into modular units (Clients, Tools, Resources, Prompts, Logs), MCP encourages composability. Developers can reuse and remix components across applications, accelerating development cycles and ensuring consistency in large-scale systems.
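
The idea of context-aware, composable tool use can be sketched as a pipeline in which each tool receives the accumulated context and returns an extended copy. This is a simplified illustration, not MCP SDK code: `outline_tool`, `count_tool`, and `run_pipeline` are hypothetical names.

```python
from typing import Callable, Dict, List

Context = Dict[str, str]
Tool = Callable[[Context], Context]


def outline_tool(ctx: Context) -> Context:
    """Produce a two-line outline from the topic already in the context."""
    titles = [f"{ctx['topic']}: Part {n}" for n in (1, 2)]
    return {**ctx, "outline": "\n".join(titles)}


def count_tool(ctx: Context) -> Context:
    """Use the previous tool's output rather than re-deriving it."""
    return {**ctx, "slide_count": str(len(ctx["outline"].splitlines()))}


def run_pipeline(tools: List[Tool], ctx: Context) -> Context:
    """Run tools in order, threading the context through each step."""
    for tool in tools:
        ctx = tool(ctx)
    return ctx


if __name__ == "__main__":
    print(run_pipeline([outline_tool, count_tool], {"topic": "MCP"}))
```

Each tool stays small and reusable, and reordering or replacing steps only changes the list passed to `run_pipeline`.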

Implementation of MCP-Based PowerPoint Generator

Overview

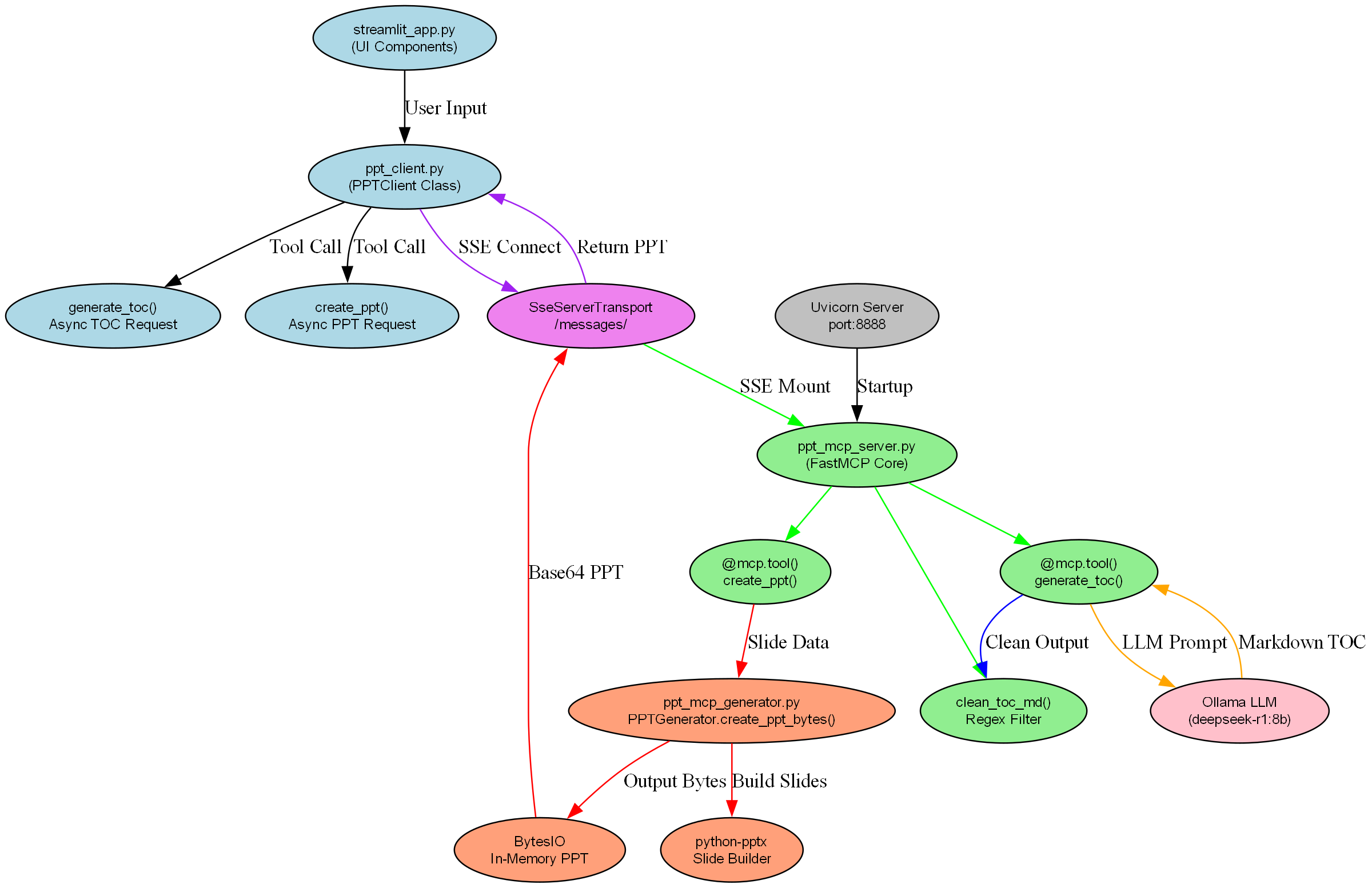

This system empowers users to generate professional PowerPoint presentations using a modular architecture built on the Model Context Protocol (MCP). It seamlessly integrates multiple components into a cohesive workflow that prioritizes flexibility, reusability, and performance. The key technologies and features include:

- Local LLM Deployment via Ollama (DeepSeek-R1): The system utilizes the DeepSeek-R1 language model hosted locally through Ollama. This provides fast, private, and cost-effective natural language generation without relying on external cloud APIs. DeepSeek-R1 is capable of generating concise slide content, headers, and summaries tailored to presentation topics.

- Interactive UI with Streamlit: A browser-based interface built with Streamlit lets users enter a topic, review and edit the generated slide content, name the output file, and download the finished presentation, all with no coding required.

- Real-Time Communication via Server-Sent Events (SSE): The Streamlit client and the MCP server communicate over MCP's SSE transport. The client keeps a stream open at /sse to receive server messages and posts its requests to /messages/, so tool results are delivered as soon as they are ready.
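
For readers new to SSE, the wire format is plain text: each event is a group of `field: value` lines terminated by a blank line, and lines beginning with a colon are comments. The parser below is a minimal sketch of that format for illustration only. `parse_sse` is a hypothetical helper, the sample payloads are made up, and in practice the MCP SDK's `sse_client` handles all of this.

```python
from typing import Dict, Iterator, List


def parse_sse(stream: str) -> Iterator[Dict[str, str]]:
    """Yield one dict per complete event found in an SSE text stream."""
    event_type = "message"  # default event type when no "event:" field is sent
    data_lines: List[str] = []
    for line in stream.splitlines():
        if line == "":
            # A blank line terminates the current event.
            if data_lines:
                yield {"event": event_type, "data": "\n".join(data_lines)}
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)


if __name__ == "__main__":
    sample = (
        "event: endpoint\n"
        "data: /messages/\n"
        "\n"
        ": keep-alive\n"
        'data: {"jsonrpc": "2.0"}\n'
        "\n"
    )
    for event in parse_sse(sample):
        print(event)
```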

MCP PowerPoint Generator - Flowchart

MCP PowerPoint Generator - Sequence Diagram

Complete Code Implementation

- ppt_mcp_server.py: FastMCP server logic + tool registry

- ppt_mcp_generator.py: Core PowerPoint generation logic

- ppt_client.py: Streamlit UI client

ppt_mcp_server.py

"""
Copyright (c) 2025 AI Leader X (aileaderx.com). All Rights Reserved.
This software is the property of AI Leader X. Unauthorized copying, distribution,
or modification of this software, via any medium, is strictly prohibited without
prior written permission. For inquiries, visit https://aileaderx.com
"""

# =============================
# PPT MCP Server Implementation
# =============================

# ---- Import necessary libraries ----
from mcp.server.fastmcp import FastMCP
from starlette.applications import Starlette
from starlette.requests import Request
from starlette.routing import Route, Mount
from mcp.server.sse import SseServerTransport
import uvicorn
import logging
from langchain_ollama.chat_models import ChatOllama
import re
from pptx import Presentation
from pptx.util import Inches, Pt
from io import BytesIO
import base64

# ---- Configure logging ----
logging.basicConfig(
    format="%(asctime)s - %(levelname)s - %(message)s",
    level=logging.INFO
)

# ---- Initialize FastMCP server with a name ----
mcp = FastMCP("ppt-server")

# ---- LLM model setup using Ollama and DeepSeek ----
llm = ChatOllama(
    model="deepseek-r1:8b",
    base_url="http://127.0.0.1:11434"
)

# =============================================
# Utility: Clean raw markdown TOC from the LLM
# =============================================
def clean_toc_md(raw_toc):
    # Remove <think>...</think> reasoning blocks emitted by DeepSeek-R1
    cleaned_text = re.sub(r'<think>.*?</think>', '', raw_toc, flags=re.DOTALL | re.IGNORECASE)

    # Extract TOC starting from first numbered point (like 1.)
    lines = cleaned_text.splitlines()
    start_index = 0
    for i, line in enumerate(lines):
        if line.strip().startswith("1."):
            start_index = i
            break

    cleaned_lines = lines[start_index:]
    return "\n".join(cleaned_lines)

# ===============================================
# Tool: Generate TOC using LLM based on a topic
# ===============================================
@mcp.tool()
async def generate_toc(topic: str) -> str:
    """Generate table of contents for a given topic"""
    try:
        prompt = (
            f"Generate text contents in PPT style for a PowerPoint presentation on: {topic}. "
            "Output only content relevant to the topic along with titles."
        )
        # Use the async API so the LLM call does not block the server's event loop
        response = await llm.ainvoke(prompt)
        return clean_toc_md(response.content)
    except Exception as e:
        logging.error(f"Error generating TOC: {str(e)}")
        raise

# =====================================================
# Utility: Generate PowerPoint bytes from topic and TOC
# =====================================================
def create_ppt_bytes(topic, toc_md):
    ppt = Presentation()

    # Set slide size to 16:9
    ppt.slide_width = Inches(13.33)
    ppt.slide_height = Inches(7.5)

    # Title Slide
    title_slide_layout = ppt.slide_layouts[0]
    slide = ppt.slides.add_slide(title_slide_layout)
    slide.shapes.title.text = topic

    # Process TOC lines into multiple slides
    toc_lines = toc_md.splitlines()
    lines_per_slide = 8
    num_slides = (len(toc_lines) + lines_per_slide - 1) // lines_per_slide

    for i in range(num_slides):
        slide_layout = ppt.slide_layouts[1]
        slide = ppt.slides.add_slide(slide_layout)
        slide.shapes.title.text = f"Title-{i}"  # Placeholder title

        start = i * lines_per_slide
        end = min((i + 1) * lines_per_slide, len(toc_lines))
        content_text = "\n".join(toc_lines[start:end])

        placeholder = slide.placeholders[1]
        placeholder.text = content_text

        # Attempt to auto-size text
        try:
            from pptx.enum.text import MSO_AUTO_SIZE
            placeholder.text_frame.auto_size = MSO_AUTO_SIZE.TEXT_TO_FIT_SHAPE
        except Exception:
            pass

        # Set font size for each line
        for paragraph in placeholder.text_frame.paragraphs:
            for run in paragraph.runs:
                run.font.size = Pt(26)

    # Save to memory buffer
    buffer = BytesIO()
    ppt.save(buffer)
    return buffer.getvalue()

# ===================================================
# Tool: Create base64-encoded PPTX from topic and TOC
# ===================================================
@mcp.tool()
async def create_ppt(topic: str, toc_md: str, ppt_name: str) -> dict:
    """Generate PowerPoint file from given topic and TOC"""
    try:
        ppt_bytes = create_ppt_bytes(topic, toc_md)
        return {
            "filename": f"{ppt_name}.pptx",
            "content": base64.b64encode(ppt_bytes).decode("utf-8")
        }
    except Exception as e:
        logging.error(f"Error generating PPT: {str(e)}")
        raise

# ==========================================================
# Setup Starlette app with SSE endpoint for FastMCP clients
# ==========================================================
def create_starlette_app():
    # Create SSE transport channel
    transport = SseServerTransport("/messages/")

    # SSE connection handler
    async def handle_sse(request: Request):
        client_ip = request.client.host if request.client else "unknown"
        logging.info(f"New PPT connection from {client_ip}")

        # Handle bi-directional communication using MCP server logic
        async with transport.connect_sse(
            request.scope, request.receive, request._send
        ) as (read_stream, write_stream):
            try:
                await mcp._mcp_server.run(
                    read_stream,
                    write_stream,
                    mcp._mcp_server.create_initialization_options()
                )
            except Exception as e:
                logging.error(f"Connection error: {str(e)}")
                raise

    # Mount routes and SSE handler
    return Starlette(
        routes=[
            Route("/sse", endpoint=handle_sse),
            Mount("/messages/", app=transport.handle_post_message),
        ]
    )

# ======================
# Run the MCP PPT Server
# ======================
if __name__ == "__main__":
    print("🟢 Starting PPT MCP Server on port 8888...")
    app = create_starlette_app()
    uvicorn.run(
        app,
        host="0.0.0.0",
        port=8888,
        log_config=None
    )
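
The server's cleanup step can be exercised on its own, without Ollama or an MCP connection. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags, which is how DeepSeek-R1 typically formats its output. `strip_reasoning` and `from_first_item` are hypothetical helper names that mirror the two stages of `clean_toc_md`.

```python
import re

# Non-greedy match so that separate reasoning blocks are removed independently.
THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL | re.IGNORECASE)


def strip_reasoning(raw: str) -> str:
    """Remove reasoning blocks and surrounding whitespace from model output."""
    return THINK_BLOCK.sub("", raw).strip()


def from_first_item(text: str) -> str:
    """Keep the text from the first line starting with '1.'; else return it unchanged."""
    lines = text.splitlines()
    for index, line in enumerate(lines):
        if line.strip().startswith("1."):
            return "\n".join(lines[index:])
    return text


if __name__ == "__main__":
    raw = "<think>Plan the deck\nstep by step</think>\nHere you go:\n1. Overview\n2. Details"
    print(from_first_item(strip_reasoning(raw)))
```

The `re.DOTALL` flag matters here because reasoning blocks usually span several lines, and without it `.` would stop at the first newline.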

ppt_mcp_generator.py

"""
Copyright (c) 2025 AI Leader X (aileaderx.com). All Rights Reserved.
This software is the property of AI Leader X. Unauthorized copying, distribution,
or modification of this software, via any medium, is strictly prohibited without
prior written permission. For inquiries, visit https://aileaderx.com
"""

from pptx import Presentation     # Main class to create PowerPoint presentations
from pptx.util import Inches, Pt  # Utilities for defining slide dimensions and font sizes
from io import BytesIO            # Used to handle in-memory byte streams (no need to save to disk)

class PPTGenerator:
    @staticmethod
    def create_ppt_bytes(topic, toc_md):
        # Create a new empty PowerPoint presentation
        ppt = Presentation()

        # Set the slide dimensions to 16:9 widescreen format
        ppt.slide_width = Inches(13.33)
        ppt.slide_height = Inches(7.5)

        # -----------------------------------------------
        # Add the Title Slide as the first slide in the deck
        # -----------------------------------------------
        title_slide_layout = ppt.slide_layouts[0]         # Use the default title slide layout
        slide = ppt.slides.add_slide(title_slide_layout)  # Add a slide with the title layout
        slide.shapes.title.text = topic                   # Set the title of the slide as the topic

        # -----------------------------------------------
        # Process the TOC markdown to create content slides
        # -----------------------------------------------
        # Split the TOC markdown string into a list of lines
        toc_lines = toc_md.splitlines()

        # Define how many TOC lines to display per slide
        lines_per_slide = 8

        # Calculate the total number of slides needed
        num_slides = (len(toc_lines) + lines_per_slide - 1) // lines_per_slide

        # Loop through and create each content slide
        for i in range(num_slides):
            slide_layout = ppt.slide_layouts[1]         # Use "Title and Content" layout for content slides
            slide = ppt.slides.add_slide(slide_layout)  # Add a new slide with that layout

            # Assign a placeholder title to each slide (this can be improved to reflect actual content)
            slide.shapes.title.text = f"Title-{i}"

            # Determine the slice of TOC lines for this slide
            start = i * lines_per_slide
            end = min((i + 1) * lines_per_slide, len(toc_lines))
            content_text = "\n".join(toc_lines[start:end])  # Join lines with newline character

            # Fill the content placeholder with the extracted TOC lines
            placeholder = slide.placeholders[1]  # Usually the second placeholder is the content area
            placeholder.text = content_text

            # -----------------------------------------------
            # Optional: Auto-size the text to fit the placeholder
            # -----------------------------------------------
            try:
                from pptx.enum.text import MSO_AUTO_SIZE
                placeholder.text_frame.auto_size = MSO_AUTO_SIZE.TEXT_TO_FIT_SHAPE
            except Exception:
                # If auto-sizing fails (e.g., older pptx version), just ignore
                pass

            # -----------------------------------------------
            # Set the font size for all text in the content box
            # -----------------------------------------------
            for paragraph in placeholder.text_frame.paragraphs:
                for run in paragraph.runs:
                    run.font.size = Pt(26)  # Set font size to 26pt for readability

        # -----------------------------------------------
        # Save the presentation to an in-memory byte stream
        # -----------------------------------------------
        buffer = BytesIO()  # Create a byte stream buffer
        ppt.save(buffer)    # Save the PowerPoint into the buffer

        # Return the raw byte content of the PowerPoint file
        return buffer.getvalue()
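
The slide-count arithmetic in the generator is a ceiling division: the expression `(n + k - 1) // k` rounds `n / k` up without using floating point. Here is a small standalone sketch of the same chunking logic. `paginate` is a hypothetical helper name, and the default of 8 lines per slide is taken from the code above.

```python
from typing import List


def paginate(lines: List[str], per_slide: int = 8) -> List[List[str]]:
    """Split lines into consecutive chunks of at most per_slide items."""
    if per_slide <= 0:
        raise ValueError("per_slide must be positive")
    num_slides = (len(lines) + per_slide - 1) // per_slide  # ceiling division
    # Slicing past the end of a list is safe, so no explicit min() is needed.
    return [lines[i * per_slide:(i + 1) * per_slide] for i in range(num_slides)]


if __name__ == "__main__":
    pages = paginate([f"line {n}" for n in range(19)])
    print([len(page) for page in pages])
```

With 19 lines and 8 lines per slide, the count is (19 + 7) // 8 = 3 slides, holding 8, 8, and 3 lines. An empty input produces no content slides at all.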

ppt_client.py

"""
Copyright (c) 2025 AI Leader X (aileaderx.com). All Rights Reserved.
This software is the property of AI Leader X. Unauthorized copying, distribution,
or modification of this software, via any medium, is strictly prohibited without
prior written permission. For inquiries, visit https://aileaderx.com
"""

# Required imports
import streamlit as st                 # Streamlit for web interface
from mcp import ClientSession          # MCP client session to interact with MCP server
from mcp.client.sse import sse_client  # SSE client for streaming communication
import asyncio                         # For running asynchronous calls
from contextlib import AsyncExitStack  # To manage async resource cleanup
import base64                          # For decoding base64-encoded PPT data
import json                            # For parsing JSON responses

# -------------------------------------
# PPTClient class: Handles interaction with the MCP server
# -------------------------------------
class PPTClient:
    def __init__(self, server_url):
        self.server_url = server_url        # MCP SSE server URL
        self.session = None                 # Will hold the ClientSession
        self.exit_stack = AsyncExitStack()  # For proper async cleanup
        # Define the context handles up front so disconnect() never hits a missing attribute
        self._streams_context = None
        self._session_context = None

    # Connect to the MCP SSE server and initialize session
    async def connect(self):
        try:
            # Start the stream context to establish SSE connection
            self._streams_context = sse_client(url=self.server_url)
            streams = await self._streams_context.__aenter__()

            # Create and initialize an MCP session
            self._session_context = ClientSession(*streams)
            self.session = await self._session_context.__aenter__()
            await self.session.initialize()
            return True
        except Exception as e:
            st.error(f"Connection failed: {str(e)}")
            return False

    # Call the "generate_toc" tool on the MCP server
    async def generate_toc(self, topic):
        response = await self.session.call_tool("generate_toc", {"topic": topic})
        if response.content:
            return response.content[0].text  # Return the markdown TOC string
        return None

    # Call the "create_ppt" tool with the topic, content, and filename
    async def create_ppt(self, topic, toc_md, ppt_name):
        response = await self.session.call_tool("create_ppt", {
            "topic": topic,
            "toc_md": toc_md,
            "ppt_name": ppt_name
        })
        if response.content:
            data = json.loads(response.content[0].text)
            # Decode base64 file content and return filename and bytes
            return data["filename"], base64.b64decode(data["content"])
        return None, None

    # Properly close the client session and stream context
    async def disconnect(self):
        if self._session_context:
            await self._session_context.__aexit__(None, None, None)
        if self._streams_context:
            await self._streams_context.__aexit__(None, None, None)

# -------------------------------------
# Helper function to only generate TOC content
# -------------------------------------
async def generate_content(topic, server_url):
    client = PPTClient(server_url)
    if not await client.connect():
        return None
    try:
        return await client.generate_toc(topic)
    finally:
        # Close the session and SSE stream even if the tool call raises
        await client.disconnect()

# -------------------------------------
# Helper function to generate PPT using topic and TOC
# -------------------------------------
async def generate_presentation(topic, toc_md, ppt_name, server_url):
    client = PPTClient(server_url)
    if not await client.connect():
        return None, None
    try:
        return await client.create_ppt(topic, toc_md, ppt_name)
    finally:
        # Close the session and SSE stream even if the tool call raises
        await client.disconnect()

# -------------------------------------
# Streamlit UI Section
# -------------------------------------

# Web app title and subtitle
st.title("AI-Generated PowerPoint Creator")
st.subheader("Generate professional presentations using MCP framework")

# Input field for the MCP server URL
server_url = st.text_input("MCP Server URL", "http://localhost:8888/sse")

# Input field to type the presentation topic
topic = st.text_area("Enter Topic:", height=100)

# Button to generate the slide content (TOC markdown)
if st.button("Generate Content"):
    if not topic.strip():
        st.error("Please enter a topic")
    else:
        toc_md = asyncio.run(generate_content(topic, server_url))
        if toc_md:
            st.session_state.toc_md = toc_md  # Store TOC in session state
            st.success("Content generated!")
        else:
            st.error("Failed to generate content")

# Show editable TOC content if already generated
if "toc_md" in st.session_state:
    edited_toc = st.text_area("Edit Content:", st.session_state.toc_md, height=200)
    st.session_state.toc_md = edited_toc  # Allow user to edit before generating PPT

# Input field to specify the output PowerPoint filename
ppt_name = st.text_input("PPT Name:", "MyPresentation")

# Button to generate and download the presentation
if st.button("Generate Presentation"):
    if "toc_md" not in st.session_state or not st.session_state.toc_md.strip():
        st.error("Please generate content first")
    else:
        filename, ppt_bytes = asyncio.run(generate_presentation(
            topic, st.session_state.toc_md, ppt_name, server_url
        ))
        if filename and ppt_bytes:
            st.success("Presentation ready!")
            # Display download button for the generated PPT file
            st.download_button(
                label=f"Download {filename}",
                data=ppt_bytes,
                file_name=filename,
                mime="application/vnd.openxmlformats-officedocument.presentationml.presentation"
            )
        else:
            st.error("Failed to generate presentation")
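
The file hand-off between server and client relies on a simple convention: the binary .pptx is base64-encoded so it can travel inside a JSON text payload, and the client reverses the encoding. The round trip can be sketched without any MCP machinery. `pack_file` and `unpack_file` are hypothetical helper names, and the sample bytes are placeholders rather than a real presentation.

```python
import base64
import json
from typing import Tuple


def pack_file(filename: str, payload: bytes) -> str:
    """Server side: wrap binary content as base64 text inside a JSON object."""
    encoded = base64.b64encode(payload).decode("utf-8")
    return json.dumps({"filename": filename, "content": encoded})


def unpack_file(text: str) -> Tuple[str, bytes]:
    """Client side: parse the JSON object and decode the original bytes."""
    data = json.loads(text)
    return data["filename"], base64.b64decode(data["content"])


if __name__ == "__main__":
    message = pack_file("MyPresentation.pptx", b"PK\x03\x04 placeholder bytes")
    name, blob = unpack_file(message)
    print(name, len(blob))
```

One trade-off worth knowing: base64 turns every 3 bytes into 4 characters, so the payload grows by about a third. That is acceptable for small decks but worth reconsidering for large files.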

Pre-Requisites: [requirements.txt]

# Core server/client framework
# MCP Python SDK (imported as "mcp" by both the server and the client)
mcp
fastapi>=0.100.0
uvicorn>=0.22.0
starlette>=0.32.0

# PowerPoint generation
python-pptx>=0.6.21

# LLM and LangChain integrations
langchain>=0.1.14
langchain-community>=0.0.30
langchain-ollama>=0.0.5

# Streamlit for client UI
streamlit>=1.32.0

# SSE and async client-server support
httpx>=0.27.0
aiohttp>=3.9.0
sse-starlette>=1.3.3

# General utilities
requests>=2.31.0

# base64 and re are part of the Python standard library (no need to install separately)

# For packaging and I/O
aiofiles>=23.2.1

# Optional: for better logging and dev experience
rich>=13.7.0

To install all dependencies:

D:\ppt_generator>conda create -n mcp-sse python=3.13 -y

D:\ppt_generator>conda activate mcp-sse

(mcp-sse) D:\ppt_generator>pip install -r requirements.txt

How to Run

- Start the PPT MCP Server:

(mcp-sse) D:\ppt_generator>python ppt_mcp_server.py
🟢 Starting PPT MCP Server on port 8888...
2025-04-24 22:12:21,216 - INFO - Started server process [59852]
2025-04-24 22:12:21,217 - INFO - Waiting for application startup.
2025-04-24 22:12:21,218 - INFO - Application startup complete.
2025-04-24 22:12:21,218 - INFO - Uvicorn running on http://0.0.0.0:8888 (Press CTRL+C to quit)

- Launch the Streamlit Client App:

(mcp-sse) D:\ppt_generator>streamlit run ppt_client.py

Access the UI in your browser:

- Local URL: http://localhost:8501

- Network URL: (your local IP):8501
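
If either command fails with an import error, a quick check of the environment can narrow things down. The sketch below uses only the standard library to report which modules cannot be found. `REQUIRED_MODULES` and `find_missing` are hypothetical names, and the module list is an assumption based on the import statements in the three scripts (note that python-pptx is imported as `pptx`).

```python
import importlib.util
from typing import Iterable, List

# Top-level import names used by the scripts in this article (an assumption
# drawn from their import statements, not an official dependency list).
REQUIRED_MODULES = ("mcp", "starlette", "uvicorn", "langchain_ollama", "pptx", "streamlit")


def find_missing(modules: Iterable[str]) -> List[str]:
    """Return the module names that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Run it inside the activated `mcp-sse` environment so that it inspects the same interpreter the server and client will use.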

MCP with SSE Mode-Based PPT Generator Output

Download Generated PowerPoint

Conclusion and Future Enhancements

This MCP-based PowerPoint Generator shows how the Model Context Protocol (MCP) can orchestrate a generative AI workflow in a modular and scalable manner. By structuring the application into well-defined components (tools exposed by a FastMCP server running on Starlette, a separate generation module, and a Streamlit client), the system keeps a clean separation of concerns and remains easy to extend.

MCP serves as a robust backbone for generative AI systems by enabling context-driven interactions between clients and models. In this application, it manages communication between the Streamlit-based UI (ppt_client.py) and the backend service (ppt_mcp_server.py), which coordinates LLM content generation and PowerPoint creation. The result is a configurable AI assistant that generates complete presentations from minimal user input.

The benefits of using MCP in generative applications include:

- 🔁 Reusability of components like tools and prompts across applications

- ⚙️ Seamless orchestration of multiple AI services (e.g., LLMs, vector DBs, image processors)

- 🧩 Extensibility for integrating new features like translation, multilingual TTS, or voice cloning

- 📦 Clear modular architecture that supports maintainability and testing

Future Enhancements may include:

- 📶 Real-time collaboration features or remote triggering of generation via APIs

- 🧠 Plug-and-play support for different LLM backends (e.g., OpenAI, vLLM, Ollama)

- 📊 Analytics on presentation usage, user preferences, or slide quality scoring

Overall, this project not only demonstrates a practical implementation of MCP in a generative AI setting but also lays the groundwork for more advanced and domain-specific applications. It is a step forward in building intelligent, modular systems that can evolve with the rapid pace of AI development.
